
Last updated May 30, 2025

To provide more visibility into the Ruby runtime, the Ruby language metrics feature surfaces additional language-specific time series metrics within Application Metrics. Ruby metrics include free and allocated heap object counts and number of free memory slots.

This feature is currently in public beta.

Ruby language metrics are available for all Cedar generation dynos except for eco dynos.

JRuby Applications

If your app is in JRuby, you will not be able to use these metrics as your app is running on top of the Java Virtual Machine (JVM). You will, however, be able to export and view JVM metrics. For more information, see the JVM Runtime Metrics document.

General Information

For general information on metrics display settings, please refer to the language runtime metrics parent document.

The implementation uses barnes, a fork of Basecamp’s trashed, to report Ruby runtime metrics to Heroku’s systems.

Getting Started

First, enable the Language Metrics flag on your application. You can do this via the Dashboard or the CLI. To enable it through the Dashboard, open the metrics preferences panel and turn on both the Enhanced Language Metrics toggle and the Ruby Language Metrics toggle.

Alternatively, to enable it through the Heroku CLI:

$ heroku labs:enable "runtime-heroku-metrics" -a "my-app-name"

$ heroku labs:enable "ruby-language-metrics" -a "my-app-name"

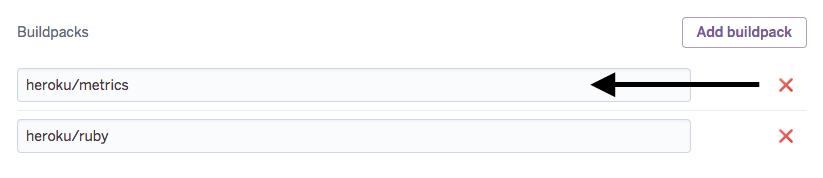

Add the metrics buildpack

The next step is to add the heroku/metrics buildpack to your application. This can be done through the CLI or the Dashboard.

Adding the buildpack via the CLI

To add the buildpack using the command line interface (CLI), run the following:

$ heroku buildpacks:add -i 1 heroku/metrics

If you are deploying using pipeline promotion, you must add this buildpack to both your production and staging apps.

This process does not work with container-based applications because they do not use buildpacks. Container-based applications are currently not supported in this beta.

Adding the buildpack via the Dashboard

From the Settings tab for your app add the buildpack like so:

Add the Barnes gem to your application

The next step is to prepare the application to utilize the Barnes gem.

Add the barnes gem to the Gemfile:

gem "barnes"

Then run:

$ bundle install

If you’re using a forking web server, such as Puma, you’ll need to call Barnes.start in each worker. For Puma that looks like this:

require 'barnes'

before_fork do

# worker specific setup

Barnes.start # Worker (cluster) mode must be enabled for this block to be called

end

Note: This does not work unless you’re using workers. Puma must be configured with at least one worker for the before_fork block to run.

Commit & Push

To finish up, commit the changes and push to Heroku:

$ git add -A .

$ git commit -am "Enable Heroku Ruby Metrics"

$ git push heroku main

Available Metrics

It may take a few minutes for these metrics to become available after the steps above are completed.

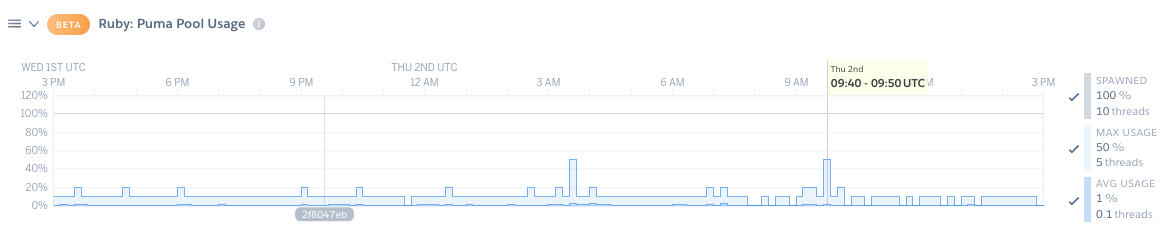

Puma Pool Usage

This metric should help you determine if your application is under or over-provisioned. The metric is provided as a percentage of your application’s request capacity. If the value is at, or close to 100%, that means any future requests will have to queue before your application can begin to process the request. This percentage is based on the number of Puma workers (processes) and max-threads per worker. A good value for this metric is to be at 80% or less. This range will allow your application to maintain some “burst” capacity. If your application is going over 80% then adding an extra dyno to your application will increase your capacity to take more requests which will in turn decrease this utilization metric.

Example: If an application has 2 Puma worker processes and each process has 5 threads, then the maximum spawned count is 10 threads per dyno (2 processes x 5 threads). If this Heroku app has 10 dynos and half of them are serving 4 requests while the other half are serving 2 requests, then the average usage is 3 of 10 threads per dyno, or 30%.
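The arithmetic in the example above can be sketched in plain Ruby. This is only an illustration: `pool_capacity` and `average_pool_usage` are hypothetical helper names, not part of Barnes or Puma, and the per-dyno request counts are the ones from the example.

```ruby
# Hypothetical helpers that reproduce the arithmetic from the example.
# They are not part of Barnes or Puma.

# Maximum number of requests one dyno can process at once.
def pool_capacity(workers:, max_threads:)
  workers * max_threads
end

# Average busy threads across dynos, and that average as a percentage
# of the per-dyno capacity.
def average_pool_usage(busy_threads_per_dyno, capacity)
  average_busy = busy_threads_per_dyno.sum.to_f / busy_threads_per_dyno.size
  { average_busy: average_busy, percent: average_busy * 100 / capacity }
end

capacity = pool_capacity(workers: 2, max_threads: 5) # 2 processes x 5 threads
busy     = [4] * 5 + [2] * 5                          # 10 dynos: half at 4, half at 2
usage    = average_pool_usage(busy, capacity)

puts "capacity per dyno: #{capacity}"
puts "average busy threads: #{usage[:average_busy]}"
puts "utilization: #{usage[:percent]}%"
```

With these inputs the average is 3 busy threads against a capacity of 10, which is well under the 80% guideline.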

Besides increasing dyno count, adding extra workers or increasing the max-threads count will also decrease your utilization number since the maximum theoretical number of requests your application can process at a time is increased. Keep in mind that the dyno is limited by both memory and CPU. If you set the maximum number of threads to an arbitrarily high value, then it will appear that the application has plenty of capacity even though it cannot reasonably be expected to handle that many concurrent requests. A reasonable starting place is to use five threads per worker. You can increase your worker count until you are close to your 24-hour memory limit without going over.

In addition to showing the percentage of utilization, the number of spawned threads is also shown. If your application has set min-threads equal to max-threads, then this spawned value should always be at 100% as is seen in the above image.

Heroku recommends running at least two dynos to maintain dyno redundancy.

To access this metric you will need Barnes gem >= 0.0.7 and Puma gem >= 3.12.0.

How to use this chart

When the pool capacity utilization consistently goes over 80%, your application is under-provisioned and could benefit from adding extra dynos to your web app. When you add a dyno then the same load will be spread across more instances and the metric should go down. Continue to add dynos until the application stabilizes at or below 80%. Running an application at around 80% utilization provides room to handle spikes of traffic.

If the pool capacity utilization is consistently at or under 20%, your application is over-provisioned and you should be able to remove dynos from your web app. When you remove a dyno the same load will be spread across fewer instances and the utilization metric should increase. It is worth noting that Heroku recommends running at least 2 dynos per application to provide resiliency to your application via dyno redundancy.
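As a rough aid for the scaling guidance above, the following sketch estimates how many dynos would bring utilization to a target. It assumes load spreads evenly across dynos, which real traffic may not do, and `suggested_dyno_count` is a hypothetical helper rather than a Heroku API. The 80% target and the two-dyno floor come from the text.

```ruby
# Hypothetical helper: estimates the dyno count that would bring pool
# utilization to a target, assuming load spreads evenly across dynos.
MIN_DYNOS = 2 # recommended minimum for dyno redundancy

def suggested_dyno_count(current_dynos:, utilization_pct:, target_pct: 80.0)
  # Total load is proportional to dynos x utilization; divide by the target
  # utilization and round up to a whole dyno.
  needed = (current_dynos * utilization_pct / target_pct).ceil
  [needed, MIN_DYNOS].max
end

# Under-provisioned: 4 dynos running at 95% utilization.
puts suggested_dyno_count(current_dynos: 4, utilization_pct: 95.0)

# Over-provisioned: 10 dynos running at 15% utilization.
puts suggested_dyno_count(current_dynos: 10, utilization_pct: 15.0)
```

Treat the result as a starting point and confirm it against the chart after each scaling change.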

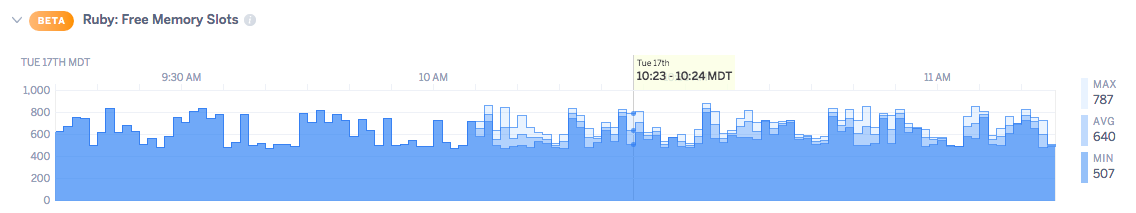

Free Memory Slots

The Free Memory Slots plot displays the minimum, maximum and average available free memory slots for the selected time interval. The most recent interval is shown as the default.

How to use this chart

A large number of free slots (for example, more than 300,000) indicates that a controller action is allocating large numbers of objects and then freeing them. You can read more about this in a guide to GC.stat about the heap_free_slots metric.

When you see this, reach for a performance analytics service such as Scout or Skylight that provides detailed information on object allocation. Alternatively, you can use Derailed Benchmarks to figure out where your application is allocating so much memory.
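To inspect the same value locally, the free slot count can be read from `GC.stat` on MRI. The sketch below is a minimal illustration: `free_slot_report` is a hypothetical helper, and the 300,000 threshold is simply the example figure from the text, not a hard limit.

```ruby
# Threshold taken from the example figure in the text; tune it for your app.
FREE_SLOT_THRESHOLD = 300_000

# Hypothetical helper: reads free and live heap slots from the running
# MRI process and flags an unusually large number of free slots.
def free_slot_report(threshold: FREE_SLOT_THRESHOLD)
  free = GC.stat(:heap_free_slots)
  live = GC.stat(:heap_live_slots)
  { free: free, live: live, high: free > threshold }
end

report = free_slot_report
puts "free slots: #{report[:free]}, live slots: #{report[:live]}"
puts "unusually many free slots, look for allocation-heavy actions" if report[:high]
```

Running this in a console after exercising a suspect endpoint gives a quick local reading before turning to a full profiler.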

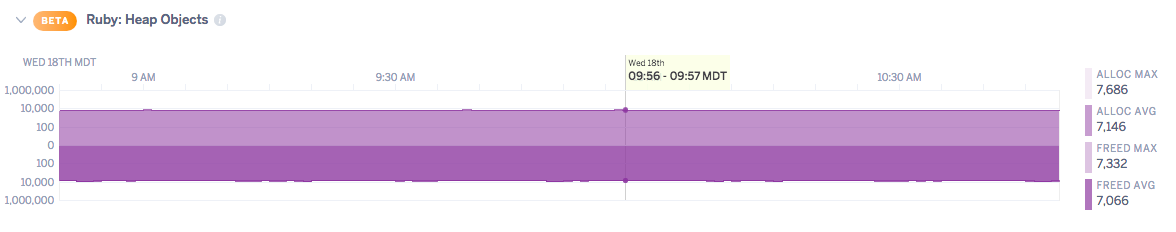

Heap Objects Count

Max and average allocated and freed heap object counts are displayed on a log scale, with freed shown as mirrored below the y-axis baseline. Metrics reflect the selected time interval, with the default being the most recent one.

How to use this chart

The allocated and freed object counts should both grow at roughly the same rate. There will be slightly more objects allocated over time until your application approaches its memory “steady state”. If, however, drastically more objects are being allocated than freed, this could indicate that somewhere in your code you are retaining objects. You will need to use a tool such as memory-profiler or Derailed Benchmarks to help find which objects are being retained.
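The gap between allocated and freed objects can also be observed directly with `GC.stat`. The following sketch is illustrative only: `object_churn` is a hypothetical helper, and the `RETAINED` array deliberately holds on to strings to mimic retention. The exact numbers vary between Ruby versions and runs.

```ruby
# Hypothetical helper: reports how many objects were allocated and freed
# while the given block ran, using MRI's cumulative GC counters.
def object_churn
  GC.start # collect earlier garbage so it is not counted as freed in the block
  before = GC.stat
  yield
  after = GC.stat
  allocated = after[:total_allocated_objects] - before[:total_allocated_objects]
  freed     = after[:total_freed_objects] - before[:total_freed_objects]
  { allocated: allocated, freed: freed, net: allocated - freed }
end

RETAINED = [] # deliberately keeps references alive to mimic retained objects

churn = object_churn do
  10_000.times { |i| RETAINED << "request-#{i}" }
  GC.start # retained strings survive this collection
end

puts "allocated: #{churn[:allocated]}, freed: #{churn[:freed]}, net: #{churn[:net]}"
```

A net value that keeps growing across repeated runs of the same workload is the pattern the chart shows as allocations outpacing frees.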

Disabling Metrics Collection

To disable Ruby metrics collection, simply toggle off the Enhanced Language Metrics toggle via the Metrics Preferences panel, or using this CLI command:

$ heroku labs:disable "runtime-heroku-metrics" -a "my-app-name"

Debugging

If you’re not seeing metrics, here are some common things to check:

- Your app is using Puma >= 3.12.0 and Barnes >= 0.0.7.
- The Barnes.start call is inside of a before_fork block.
- Your app runs in cluster mode (at least one worker) and not single mode. Otherwise, before_fork will never fire.
- The metrics buildpack has been added to your app.
- The Labs flag is enabled.

If all of these are correct but you’re still not seeing metrics you can check for debug output:

$ heroku run bash

$ BARNES_DEBUG=1 DYNO="web.1" bundle exec puma -p ${PORT:-3000}

Use the same command to boot your application as in your Procfile.

Wait a few seconds after your application has booted and you should see debug output to standard out:

{"barnes.state":{"stopwatch":{"wall":1561579468665.421,"cpu":8387.169301},"ruby_gc":{"count":10,"heap_allocated_pages":2451,"heap_sorted_length":3768,"heap_allocatable_pages":1317,"heap_available_slots":999027,"heap_live_slots":930476,"heap_free_slots":68551,"heap_final_slots":0,"heap_marked_slots":921621,"heap_eden_pages":2451,"heap_tomb_pages":0,"total_allocated_pages":2451,"total_freed_pages":0,"total_allocated_objects":3068719,"total_freed_objects":2138243,"malloc_increase_bytes":2716264,"malloc_increase_bytes_limit":30330547,"minor_gc_count":6,"major_gc_count":4,"remembered_wb_unprotected_objects":11936,"remembered_wb_unprotected_objects_limit":23872,"old_objects":898986,"old_objects_limit":1797974,"oldmalloc_increase_bytes":23976536,"oldmalloc_increase_bytes_limit":58823529}},"barnes.counters":{"Time.wall":10096.75341796875,"Time.cpu":11.80766799999947,"Time.idle":10084.94574996875,"Time.pct.cpu":0.1169451952643102,"Time.pct.idle":99.88305480473569,"GC.count":0,"GC.major_count":0,"GC.minor_gc_count":0},"barnes.gauges":{"using.puma":1,"pool.capacity":40,"threads.max":40,"threads.spawned":40,"Objects.TOTAL":999027,"Objects.FREE":68593,"Objects.T_OBJECT":72287,"Objects.T_CLASS":25522,"Objects.T_MODULE":3083,"Objects.T_FLOAT":14,"Objects.T_STRING":401533,"Objects.T_REGEXP":3051,"Objects.T_ARRAY":78539,"Objects.T_HASH":44655,"Objects.T_STRUCT":2244,"Objects.T_BIGNUM":148,"Objects.T_FILE":13,"Objects.T_DATA":44890,"Objects.T_MATCH":78,"Objects.T_COMPLEX":1,"Objects.T_RATIONAL":765,"Objects.T_SYMBOL":2470,"Objects.T_IMEMO":241182,"Objects.T_ICLASS":9959,"GC.total_allocated_objects":9474.0,"GC.total_freed_objects":36.0,"GC.count":10,"GC.heap_allocated_pages":2451,"GC.heap_sorted_length":3768,"GC.heap_allocatable_pages":1317,"GC.heap_available_slots":999027,"GC.heap_live_slots":930476,"GC.heap_free_slots":68551,"GC.heap_final_slots":0,"GC.heap_marked_slots":921621,"GC.heap_eden_pages":2451,"GC.heap_tomb_pages":0,"GC.total_allocated_pages":2451,"GC.total_freed_pages":0,
"GC.malloc_increase_bytes":2716264,"GC.malloc_increase_bytes_limit":30330547,"GC.minor_gc_count":6,"GC.major_gc_count":4,"GC.remembered_wb_unprotected_objects":11936,"GC.remembered_wb_unprotected_objects_limit":23872,"GC.old_objects":898986,"GC.old_objects_limit":1797974,"GC.oldmalloc_increase_bytes":23976536,"GC.oldmalloc_increase_bytes_limit":58823529}}

Make sure that there is a pool.capacity key in the output.
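Because the debug output is a single JSON document, the check can be scripted rather than done by eye. This sketch uses a heavily trimmed copy of the sample output above. `puma_pool_reported?` is a hypothetical helper, and it assumes the key lives under "barnes.gauges" as it does in that sample.

```ruby
require 'json'

# Trimmed copy of the sample debug line; most keys are omitted for brevity.
debug_line = '{"barnes.state":{},"barnes.counters":{"GC.count":0},' \
             '"barnes.gauges":{"using.puma":1,"pool.capacity":40,' \
             '"threads.max":40,"threads.spawned":40}}'

# Hypothetical helper: true when the gauges section reports pool.capacity.
def puma_pool_reported?(line)
  gauges = JSON.parse(line).fetch("barnes.gauges", {})
  gauges.key?("pool.capacity")
end

gauges = JSON.parse(debug_line)["barnes.gauges"]
puts "pool.capacity present: #{puma_pool_reported?(debug_line)}"
puts "threads spawned: #{gauges['threads.spawned']} of #{gauges['threads.max']}"
```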

The metrics plugin relies on the PORT environment variable being set correctly. Make sure that it is being read from the environment correctly:

$ heroku run bash

~$ PORT=12345 rails runner "puts 'port is ' + ENV['PORT']"

port is 12345

Some applications run behind a proxy script like bin/proxy bundle exec rails s that might modify the PORT environment variable. If your application runs behind a proxy that modifies PORT your proxy script should be modified to export the original port value.

For example, if this is in your Procfile:

web: bin/proxy bundle exec rails s

Then you could modify it to record the original port:

web: PORT_NO_PROXY=$PORT bin/proxy bundle exec rails s

And in your application use this environment variable:

require 'statsd'

Barnes.start(statsd: Statsd.new('127.0.0.1', ENV["PORT_NO_PROXY"]))
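If the same code runs both with and without the proxy, the port lookup can be made tolerant of either case. The sketch below is one possible approach, not something Barnes provides: `metrics_port` is a hypothetical helper, and the fallback to PORT is an assumption about how you want unproxied environments to behave.

```ruby
# Hypothetical helper: prefers PORT_NO_PROXY (the variable name used in the
# Procfile example) and falls back to PORT when no proxy has rewritten it.
def metrics_port(env = ENV)
  raw = env["PORT_NO_PROXY"] || env["PORT"]
  raise KeyError, "neither PORT_NO_PROXY nor PORT is set" if raw.nil? || raw.empty?

  Integer(raw, 10) # fail loudly on a non-numeric value
end

# Behind a proxy that rewrote PORT, the original value wins.
puts metrics_port({ "PORT_NO_PROXY" => "12345", "PORT" => "5000" })

# Without a proxy, PORT is used directly.
puts metrics_port({ "PORT" => "5000" })
```

The returned integer could then be passed where the example above passes ENV["PORT_NO_PROXY"].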