Last updated December 03, 2024

Heroku Postgres users can run up against the connection limits of their chosen database plan. This results in connection request queueing that can degrade performance and reliability. Connection pooling with PgBouncer can solve this by sharing database server connections among clients.

This article:

- Provides an overview of how database connections and sessions work

- Details the different types of connection pooling available with PgBouncer

- Directs you in your implementation of connection pooling with PgBouncer on Heroku

Heroku Enterprise customers with Premier or Signature Success Plans can request in-depth guidance on this topic from the Customer Solutions Architecture (CSA) team. Learn more about Expert Coaching Sessions here or contact your Salesforce account executive.

Database Connections, Sessions, and Connection Pooling

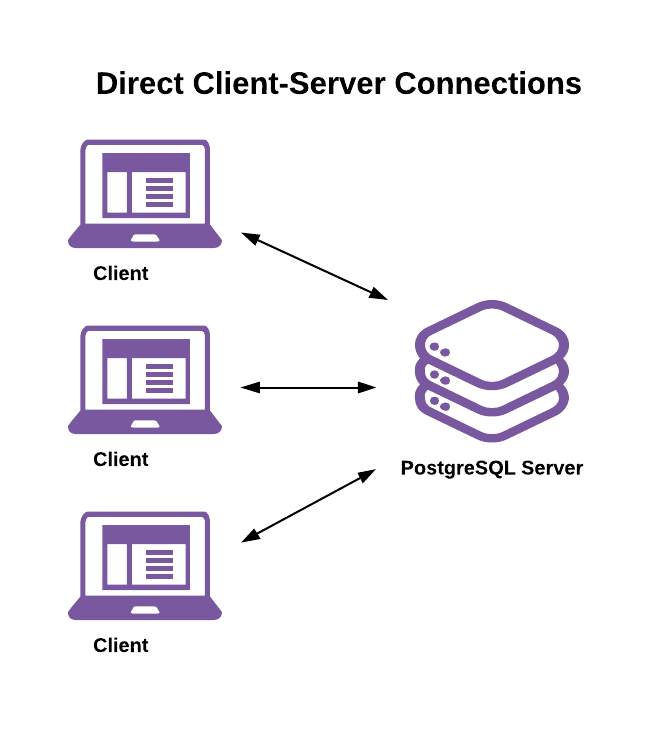

Connection pooling introduces important changes to how database connections and sessions work. To understand those changes, it’s important to first understand their normal behavior. Non-pooled connections follow a standard client-server connection architecture:

Here’s a high-level view of the PostgreSQL connection lifecycle without connection pooling:

- A client begins a new session by asking for and authenticating a connection to the server.

- The server forks a new system process to handle the connection and work session. The session’s state is initialized per a combination of server-level, database-level, and user-level configuration parameters.

- The client does as much work as it needs by executing one or more transactions. Examples include:

- Execute reads and writes against relations (tables, views, etc.)

- Use the SET command to change the session or transaction state

- Prepare and execute prepared statements

- The session ends when the client disconnects.

- The server destroys the process that handled the session.

A database session consists of all the work done over a single connection’s lifetime. Database sessions are of variable length in time and consume a variable amount of resources on both the client and server.

The key takeaways from this are:

- Creating, managing, and destroying connection processes takes time and consumes resources.

- As a server’s connection count grows, the resources needed to manage those connections also grow. Further, a server’s per-process memory usage continues to grow as clients do work on them.

- Since a single session only services a single client, clients can change the database session’s state and expect those changes to persist across successive transactions.

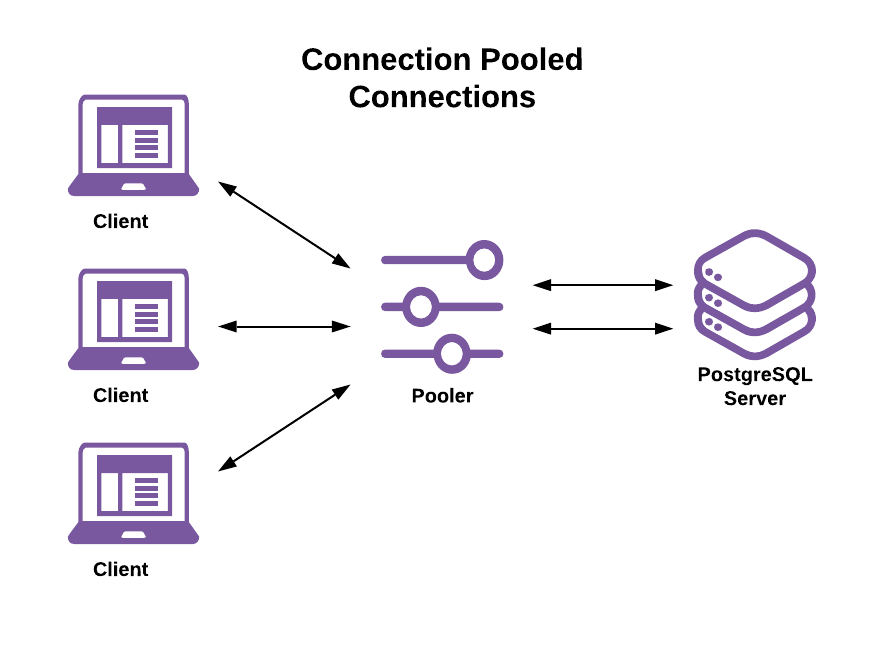

A connection pooler sits between clients and the server. Clients connect to the pooler and the pooler connects to the server. Introducing a connection pooler changes the connection model to a client-proxy-server architecture:

This decouples the client connection lifetime from the server connection and process lifetime. The connection pooler is now responsible for:

- Accepting and managing connections from the client

- Establishing and maintaining connections to the server

- Assigning server connections to client connections

This allows:

- A single-server connection to handle sessions, transactions, and statements from different clients

- A single client session’s transactions and/or statements to run on different server connections

In the rest of this article:

- “client connection” refers to a connection between a client and the connection pooler

- “server connection” refers to a connection between the connection pooler and server

PgBouncer’s Connection Pooling Modes

PgBouncer has three pooling modes available: transaction pooling, session pooling, and statement pooling. It’s important that you understand how each work. The pooling mode used:

- Determines how long a server connection stays assigned to a client connection.

- Imposes limitations on what a client can and can’t do, as described in the next sections.

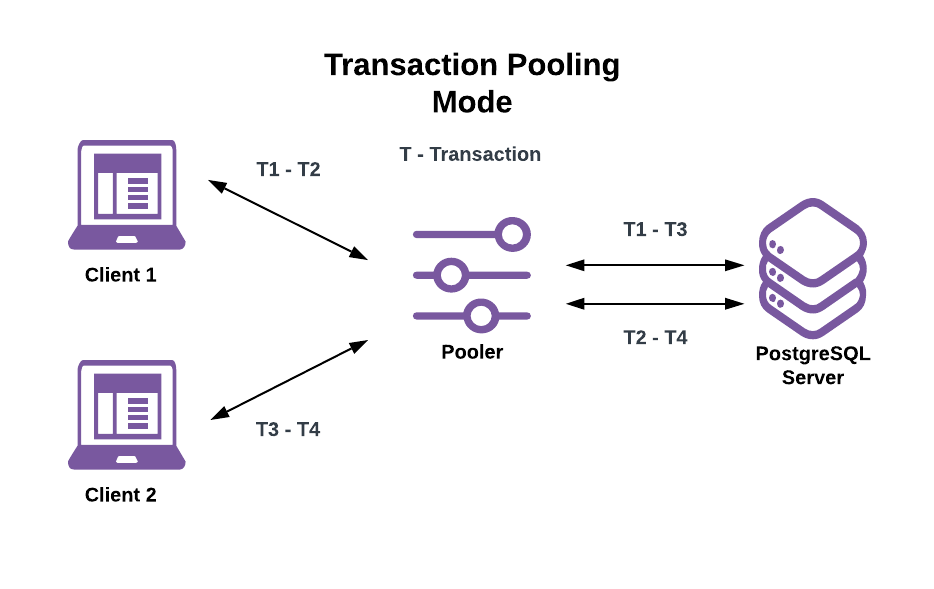

Transaction Pooling Mode (Recommended)

Database clients rarely execute consecutive transactions with no pauses between. Instead, non-database work is performed between transactions. This means that server connections spend a lot of time idle while waiting for new work to arrive.

Transaction pooling mode seeks to reduce server connection idle time like so:

- The pooler assigns a server connection to a client when it begins a transaction.

- The pooler releases the connection assignment as soon as the client’s transaction completes.

This means that:

- If a client runs more than one transaction, each can be executed on different server connections.

- A single-server connection can run transactions issued by different clients over its lifetime.

This allows for a far larger number of active clients than connections allowed by the server. While it is dependent on the given workload, it isn’t uncommon to see a 10x or more active client-connection to server-connection ratio.

This does come with an important caveat: Clients can no longer expect that changes made to database session state persist across successive transactions made by the same client, as those can run on different server connections. Furthermore, if a client makes session state changes they may, and likely will, affect other clients.

Here are some examples using the earlier transaction pooling example image:

- If Client 1 sets the session to read-only on the first server connection in T1 and Client 2’s T3 is a write transaction, then T3 fails since it runs on the now read-only server connection.

- If Client 1 runs

PREPARE a1 AS ...in T1 and then runsEXECUTE a1 ...in T2, then T2 fails because the prepared statement is local to the server connection T1 was run on. - If Client 2 creates a temporary table in T3 and attempts to use it in T4, then T4 fails because the temporary table is local to the server connection T3 was run on.

Transaction pooling mode benefits:

- Allow for more active clients than connections allowed by the server.

- Reduce server resources needed for a given number of clients.

Transaction pooling mode caveats:

- Any changes to session state via

SETmust only be made withSET LOCALso that the changes are scoped only to the currently executing transaction. Never useSET SESSIONorSETalone, which defaults toSET SESSIONwith transaction pooling. - Prepared statements can’t be used.

- When using temporary tables, they must be created, used, and dropped in the same transaction. Tip: Using

ON COMMIT DROPwhen creating temporary tables causes them to be automatically dropped when the creating transaction finishes. - You can’t pass certain connection parameters, including

optionsto PgBouncer.

For a full list of session state features and operations that aren’t supported when using transaction pooling see PgBouncer’s list.

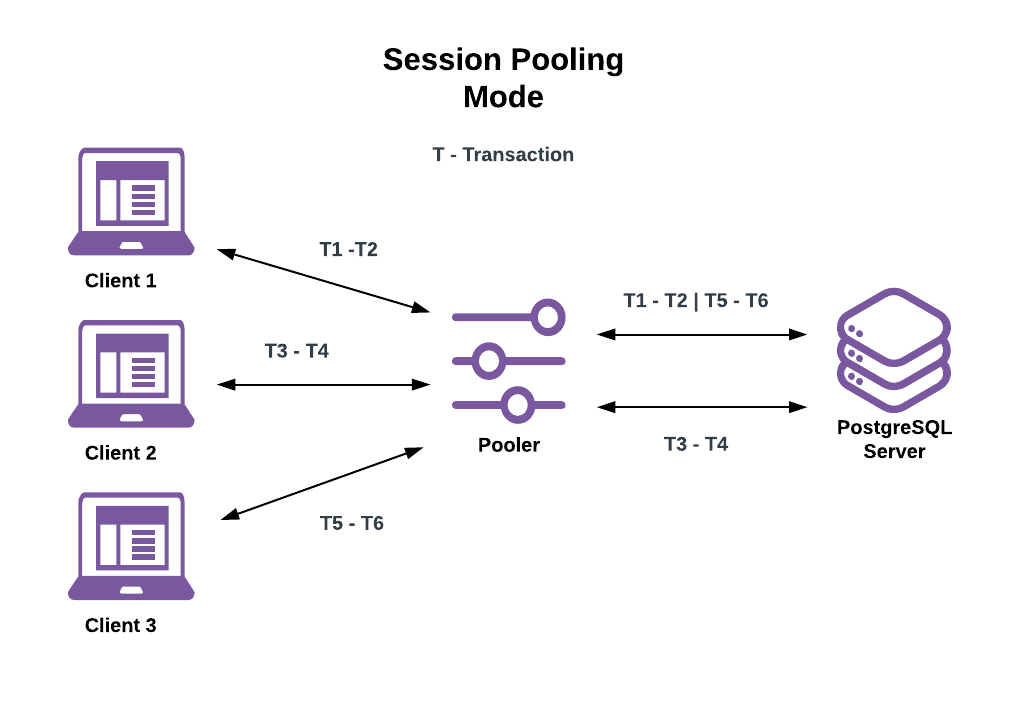

Session Pooling Mode

Here server connection assignments to clients last for the lifetime of the client connections. This seems the same as not using a connection pooler at all but there’s an important difference: server connections aren’t destroyed when an assigned client disconnects. When a client disconnects the pooler will:

- Clear any session state changes made by the client.

- Return the server connection to the pool for use by another client.

Session pooling mode benefits:

- Session pooling reduces time spent waiting for server to create new connection processes when clients connect.

- Many ORMs and application frameworks provide session pooling via their built-in connection pools (for example, Ruby on Rails).

Session pooling mode caveats:

- Because server connection assignments last for the lifetime of the assigned client connection, the number of active client connections is still limited by the server’s connection limit.

- Before using PgBouncer for session pooling be sure to check your chosen application framework and/or ORM to see if it has a session pool.

- You can’t pass certain connection parameters, including

optionsto PgBouncer.

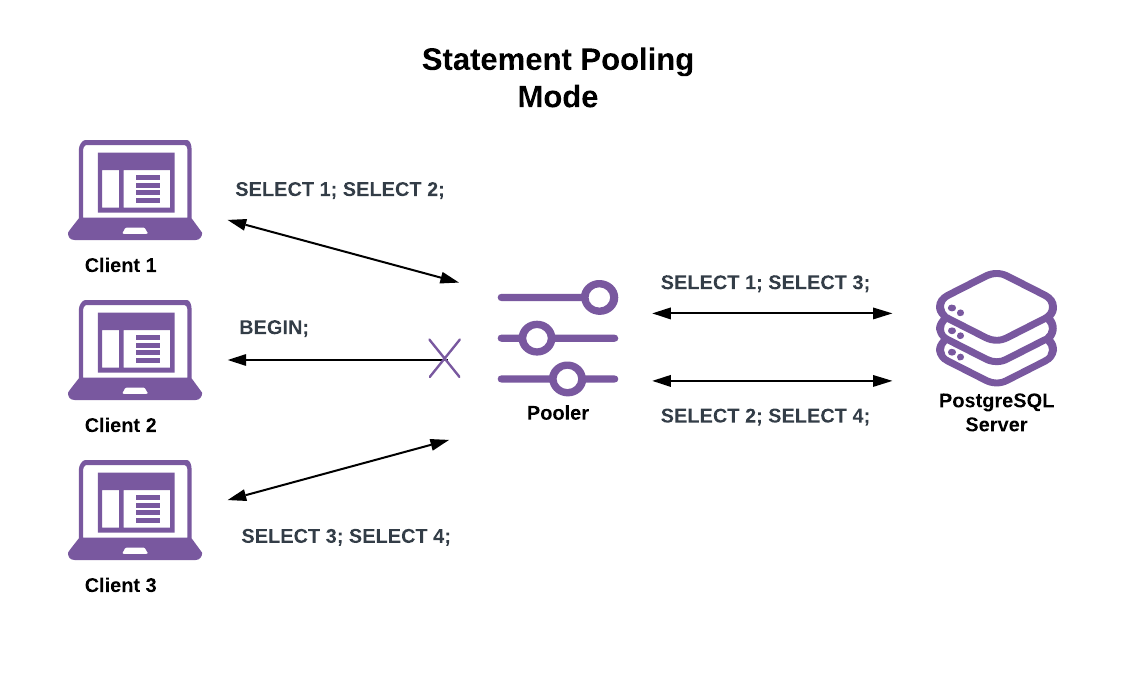

Statement Pooling Mode

Here server connections assignments last only for the duration of a single statement. This has the same session-state limitations as transaction pooling mode while also breaking transaction semantics.

This makes all client connections behave as if in “autocommit” mode. If a client attempts to begin a multi-statement transaction the pooler returns an error. While that is limiting, it allows for even higher active client connection counts than with transaction pooling. Good use cases include serving a large volume of simple key lookups or issuing single-statement writes.

Statement pooling mode benefits:

Allows for far higher active client connections than even transaction pooling mode.

Statement pooling mode caveats:

- Has the same session state restrictions as transaction mode pooling.

- Doesn’t allow multi-statement transactions

- You can’t pass certain connection parameters, including

optionsto PgBouncer.

PgBouncer on Heroku: Server-Side vs. Client-Side

There are two options for using PgBouncer on the Heroku platform: Server-Side or Client-Side. Here are the key features of each option:

Server-Side

- Runs locally on the Heroku Postgres servers

- Transaction pooling mode only

- Supports up to 10,000 client connections

- Doesn’t support user configuration changes

- Only supports use with the default Postgres Credential

- See documentation here for how to set up and use this option.

Client-Side Buildpack

The PgBouncer buildpack is a classic buildpack. There currently is no Cloud Native Buildpack version.

- Runs locally on each dyno type configured to use it (via your application’s Procfile)

- Allows configuration of PgBouncer’s most common configuration options via your application’s config variables

- Works with any number of Heroku Postgres credentials

- See documentation here for how to install and use the buildpack.

Choosing between Server-Side or Client-Side PgBouncer

While the lack of configuration with the Server-Side option seems limiting, it covers the most common use case of needing a simple-to-use transaction pooler. If at any point that becomes too restrictive, the Client-Side Buildpack can be used instead.

Consider the Server-Side option first if:

- Transaction pooling mode is exactly what you need.

- You only use the default Heroku Postgres credential.

Use the Client-Side option if:

- You want complete control and configurability of your connection pooling.

- You use more than just the default credential.

- You need either of the session or statement pooling modes.

Configuring the Client-Side Buildpack

Here’s a brief rundown of the primary configuration options that you want to consider changing from their default values.

A PgBouncer connection pool consists of all connections made by a single database user to a single database on a single host. For example, all connection made by ‘user1’ to ‘database1’ on ‘host1’ uses the same pool on a given PgBouncer instance.

PGBOUNCER_AUTH_TYPE (Default: scram-sha-256)

You can change to md5 or plain depending on server support.

PGBOUNCER_SERVER_TLS_SSLMODE (Default: require)

Connections between PgBouncer and PostgreSQL must go over TLS.

PGBOUNCER_POOL_MODE (Default: transaction)

Change this if you want to use session or statement pooling.

PGBOUNCER_URLS (Default: DATABASE_URL)

A (space separated) list of application config URLs to rewrite to point to the local PgBouncer instance on each dyno. This URL rewriting only affects the environment variable values on the running dynos, not your application’s config variables.

PGBOUNCER_MAX_CLIENT_CONN (Default: 100)

How many clients the pooler accepts across all managed pools before outright refusing them. When using transaction or statement pooling this can be much higher than the server connections limit, hence the high default.

PGBOUNCER_DEFAULT_POOL_SIZE (Default: 1)

The maximum number of server connections that can be assigned to a pool before using reserve connections (see below). Large, high-traffic applications serving concurrent requests per dyno increases this value.

Take care when setting this value to ensure that the total number of allowed server connections across all pools on all dynos doesn’t exceed the servers’ connection limit.

For example, if you plan to run 20 dynos with a single pool on each, then you must multiply this value by 20 to determine the maximum number of server connections that can be created across all 20 dynos. If your plan allows for 500 connections then you must keep this value at or under 25 (20 * 25 -> 500).

A note on using the Preboot feature with PgBouncer

Preboot works to minimize the traffic interruption effects of dyno restarts by booting their replacements before stopping the ones being replaced (dyno restarts are dyno replacements). If the total allowed server connections across all pools dynos are more than half of your Heroku Postgres plan’s connection limit and the new dynos quickly ramp up then you can run into issues with application requests stalling while waiting for database connections and must reduce the pool size to account for this.

PGBOUNCER_RESERVE_POOL_SIZE (Default: 1) and PGBOUNCER_RESERVE_POOL_TIMEOUT (Default: 5 seconds)

If clients are waiting for server connection assignments due to the given pool’s active server connections being currently maxed out (see: PGBOUNCER_DEFAULT_POOL_SIZE) allow up to PGBOUNCER_RESERVE_POOL_SIZE more connections after PGBOUNCER_RESERVE_POOL_TIMEOUT time spent waiting. The reserve pool is shared across all connection pools.

PGBOUNCER_SERVER_IDLE_TIMEOUT (Default: 10 seconds)

Any server connection that remains unused for longer than this will be closed. Setting this to 0 disables the idle timeout.

PGBOUNCER_SERVER_LIFETIME (Default: 3600 seconds)

Any unused server connection that has been alive for longer than this setting will be closed. Setting this to 0 doesn’t disable the setting, instead it makes each server connection a single-use connection.

PGBOUNCER_SERVER_RESET_QUERY (Default: "DISCARD ALL;" in session pooling mode, empty otherwise)

This is what the pooler uses to reset sessions after clients disconnect in session pooling mode. There’s no effective way to use this to get around the unsupported session state features when using transaction and statement pooling modes as detailed earlier in this article.

PGBOUNCER_QUERY_WAIT_TIMEOUT (Default: 120 seconds)

This determines how long the pooler allows a client waiting for a server connection assignment to wait before returning an error. If you suspect that clients are regularly waiting for server connections, dropping this to low values helps show that at the expense of those clients failing rather than eventually proceeding.